Researchers tested whether two artificial intelligence systems could develop an encryption algorithm that a third one could not break. In some test runs it worked.

Cryptography and artificial intelligence (AI) are two fascinating branches of computer science. However, they don’t have many points of contact. There is a branch of cryptography named “neural cryptography”, but it doesn’t play any role in practice. In the crypto books I know (including the ones I wrote) the term “artificial intelligence” isn’t even mentioned.

Artificial neural networks

Martín Abadi and David G. Andersen from the Google Brain project have now published a research paper that applies artificial intelligence in the field of cryptography. Their idea was as simple as it was intriguing: let artificial intelligence create an encryption algorithm and see whether artificial intelligence can break it.

Abadi and Andersen carried out the following experiment: Two artificial intelligence systems, named Alice and Bob, communicated with each other in an encrypted way, while a third one (Eve) was listening and trying to break the encryption. Alice and Bob had to develop their encryption algorithm from scratch. Eve, too, had to develop her codebreaking abilities without any previous knowledge.

All three AI systems involved in the experiment were based on artificial neural networks. An artificial neural network is a computer program that loosely models the human brain. Artificial neural networks have been used successfully for applications like vehicle control, game playing (including poker and chess) and pattern recognition. If you take part in a slot car race against an artificial neural network, you will almost certainly lose.

An artificial neural network is not programmed in the usual sense. Instead, it is supposed to learn. After an artificial neural network has been fed with some input (e.g. the position of a chess game) and has delivered some output (e.g. a chess move), some reaction takes place (e.g. it is evaluated whether it was a good move). Based on this reaction, the network is, for instance, “punished” or “rewarded”. The reaction leads to a defined reorganisation of the content of the artificial neural network. After thousands of steps (each one including input, output, reaction and reorganisation) the output of an artificial neural network is expected to be close to optimal (e.g. the chess move delivered is expected to be a good one).
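The loop of input, output, reaction and reorganisation can be sketched with a toy example. The following is only an illustration with a single artificial neuron that learns the logical OR function; it is not taken from the research paper, and the function names, the learning rate and the number of steps are assumptions made for this sketch.

```python
# Toy illustration only: one artificial neuron learning logical OR.
# All names and parameters here are assumptions, not from the paper.

def predict(weights, bias, inputs):
    """Output: 1 if the weighted sum of the inputs exceeds zero, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0

def train(samples, steps=100, rate=0.1):
    """Repeat input -> output -> reaction -> reorganisation many times."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(steps):
        for inputs, target in samples:
            output = predict(weights, bias, inputs)  # input -> output
            error = target - output                  # reaction (0 means correct)
            # Reorganisation: the weights only change when the output was wrong.
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias

OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train(OR_SAMPLES)
results = [predict(weights, bias, inputs) for inputs, _ in OR_SAMPLES]
print(results)
```

Real neural networks consist of many such units and use more refined update rules, but the principle of adjusting internal values after each reaction is the same.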

Artificial neural networks producing encryption algorithms

Alice, Bob and Eve were realised as artificial neural networks working as described. Alice and Bob were not told how to encrypt data, or what crypto techniques to use. Instead, they were just given a failure or success message after each message transfer, and then they had to learn from it. Alice, Bob, and Eve all shared the same neural network architecture. The only difference was that Alice and Bob knew a key Eve didn’t have access to.
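To make the roles clearer, here is a minimal sketch of the data flow between the three parties. Note that the XOR operation used here is only a hand-written stand-in chosen for illustration; the networks in the experiment were not told to use it, and the article does not say what they actually learned. The function names and the random-guessing Eve are assumptions as well.

```python
import secrets

N_BITS = 16  # message length of the run described in this article

def random_bits(n=N_BITS):
    """Return a list of n random bits."""
    return [secrets.randbelow(2) for _ in range(n)]

def alice(plaintext, key):
    # Stand-in only: XOR with the shared key (not the learned method).
    return [p ^ k for p, k in zip(plaintext, key)]

def bob(ciphertext, key):
    # Bob knows the same key as Alice and can undo the XOR.
    return [c ^ k for c, k in zip(ciphertext, key)]

def eve(ciphertext):
    # Eve sees only the ciphertext. Without the key, this stand-in just guesses.
    return random_bits(len(ciphertext))

plaintext, key = random_bits(), random_bits()
ciphertext = alice(plaintext, key)
bob_guess = bob(ciphertext, key)
eve_guess = eve(ciphertext)
```

The point of the sketch is the interface: Alice and Bob receive the key as an additional input, while Eve has to work with the ciphertext alone.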

If Bob’s guess of the plaintext was too far from the original, it was a failure. If Eve’s guess of the plaintext was better than random guessing, it was a failure for Alice and Bob, too. Likewise, Eve was not told how to break a code. Eve’s output was considered a success if she guessed more than 50 percent of the plaintext bits correctly (50 percent is what can be achieved by random guessing).
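The success and failure rules described above can be written down as a few lines of code. This is a simplified sketch based only on the description in this article. In particular, the `tolerance` parameter is an assumption: the article does not state how far Bob’s guess may deviate before it counts as a failure.

```python
def wrong_bits(plaintext, guess):
    """Count the positions where the guess differs from the plaintext."""
    return sum(p != g for p, g in zip(plaintext, guess))

def bob_succeeds(plaintext, guess, tolerance=0):
    # 'tolerance' is a hypothetical threshold for "too far from the original".
    return wrong_bits(plaintext, guess) <= tolerance

def eve_succeeds(plaintext, guess):
    # Eve succeeds if she gets more than 50 percent of the bits right.
    correct = len(plaintext) - wrong_bits(plaintext, guess)
    return correct > len(plaintext) / 2

def alice_and_bob_succeed(plaintext, bob_guess, eve_guess, tolerance=0):
    # Alice and Bob need both: Bob is right and Eve is no better than chance.
    return (bob_succeeds(plaintext, bob_guess, tolerance)
            and not eve_succeeds(plaintext, eve_guess))

message = [1, 0, 1, 1, 0, 0, 1, 0]
half_right = [1, 0, 1, 1, 1, 1, 0, 1]   # first four bits right, last four wrong
mostly_right = [1, 0, 1, 1, 0, 1, 0, 1]  # five bits right, three wrong
print(alice_and_bob_succeed(message, message, half_right))
print(alice_and_bob_succeed(message, message, mostly_right))
```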

The main question was whether in the end (after thousands of failures or successes) Alice and Bob would figure out an encryption method that Eve was not able to break.

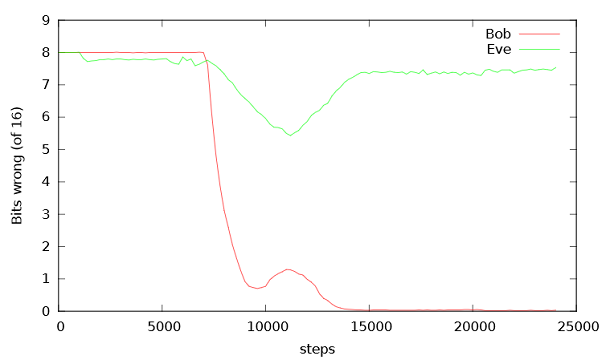

Abadi and Andersen repeated this experiment many times with different parameters. As expected, some runs were a flop, with Bob unable to reconstruct the plaintext. Most of the time, Alice and Bob did manage to evolve a system where they could communicate, but errors happened or Eve was able to guess parts of the message. However, some runs were a success, with Alice and Bob developping an encryption method that worked properly and that Eve could not break. The following diagram shows a successful run (the cleartext was 16 bits long):

As can be seen, Bob could not reconstruct the cleartext in the first 7,000 steps, but then he improved. After about 14,000 steps he had learned how to decrypt Alice’s message without making any mistakes. Eve, on the other hand, never got fewer than five bits wrong. After 15,000 steps her rate settled at eight correctly guessed bits out of 16, which is a 50 percent rate.
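Why eight out of 16 bits is the benchmark can be checked with a short calculation. The sketch below assumes that a guesser without any information flips a fair coin for each bit independently; this model is an assumption made for illustration and is not part of the experiment itself.

```python
from fractions import Fraction
from math import comb

N_BITS = 16  # cleartext length in the run shown above

def prob_correct(k, n=N_BITS):
    """Probability that a fair-coin guesser gets exactly k of n bits right."""
    return Fraction(comb(n, k), 2 ** n)

# Average number of correct bits for pure guessing.
expected_correct = sum(k * prob_correct(k) for k in range(N_BITS + 1))

# Chance that a single pure guess has five or fewer wrong bits.
prob_five_or_fewer_wrong = sum(
    prob_correct(k) for k in range(N_BITS - 5, N_BITS + 1)
)

print(expected_correct)
print(float(prob_five_or_fewer_wrong))
```

Under this model the average is exactly eight correct bits, so an Eve who stays at eight bits has learned nothing about the plaintext.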

Of course, it would be interesting to look at the encryption algorithms Alice and Bob produced in these experiments. However, examining these algorithms was beyond the scope of this work. Perhaps this can be done in a future study.

